Our project is carried out in close collaboration with the Technical University of Munich, in particular with the TUM Data Science in Earth Observation (Sipeo) group. The complete list of associated publications may also be of interest and is available here.

Publications

2021

Mandl, David; Roth, Peter M; Langlotz, Tobias; Ebner, Christoph; Mori, Shohei; Zollmann, Stefanie; Mohr, Peter; Kalkofen, Denis Neural Cameras: Learning Camera Characteristics for Coherent Mixed Reality Rendering Inproceedings 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 508–516, IEEE Publications, 2021, ISBN: 978-1-7281-9777-7, (Conference date: 04-10-2021 through 08-10-2021).
@inproceedings{238e0aabb29b487b99c5315be5d8d2ed, title = {Neural Cameras: Learning Camera Characteristics for Coherent Mixed Reality Rendering}, author = {David Mandl and Peter M Roth and Tobias Langlotz and Christoph Ebner and Shohei Mori and Stefanie Zollmann and Peter Mohr and Denis Kalkofen}, doi = {10.1109/ISMAR52148.2021.00068}, isbn = {978-1-7281-9777-7}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)}, pages = {508--516}, publisher = {IEEE Publications}, abstract = {Coherent rendering is important for generating plausible Mixed Reality presentations of virtual objects within a user's real-world environment. Besides photo-realistic rendering and correct lighting, visual coherence requires simulating the imaging system that is used to capture the real environment. While existing approaches either focus on a specific camera or a specific component of the imaging system, we introduce Neural Cameras, the first approach that jointly simulates all major components of an arbitrary modern camera using neural networks. Our system allows for adding new cameras to the framework by learning the visual properties from a database of images that has been captured using the physical camera. We present qualitative and quantitative results and discuss future directions for research that emerge from using Neural Cameras.}, note = {Conference date: 04-10-2021 through 08-10-2021}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Pepe, Antonio; Egger, Jan; Codari, Marina; Willemink, Martin J; Gsaxner, Christina; Li, Jianning; Roth, Peter M; Mistelbauer, Gabriel; Schmalstieg, Dieter; Fleischmann, Dominik Automated cross-sectional view selection in CT angiography of aortic dissections with uncertainty awareness and retrospective clinical annotations Miscellaneous arXiv, 2021.
@misc{https://doi.org/10.48550/arxiv.2111.11269, title = {Automated cross-sectional view selection in CT angiography of aortic dissections with uncertainty awareness and retrospective clinical annotations}, author = {Antonio Pepe and Jan Egger and Marina Codari and Martin J Willemink and Christina Gsaxner and Jianning Li and Peter M Roth and Gabriel Mistelbauer and Dieter Schmalstieg and Dominik Fleischmann}, url = {https://arxiv.org/abs/2111.11269}, doi = {10.48550/ARXIV.2111.11269}, year = {2021}, date = {2021-01-01}, publisher = {arXiv}, keywords = {}, pubstate = {published}, tppubtype = {misc} }

Zhu, Xiao Xiang; Montazeri, Sina; Ali, Mohsin; Hua, Yuansheng; Wang, Yuanyuan; Mou, Lichao; Shi, Yilei; Xu, Feng; Bamler, Richard Deep Learning Meets SAR: Concepts, models, pitfalls, and perspectives Journal Article IEEE Geoscience and Remote Sensing Magazine, 9 (4), pp. 143-172, 2021.
@article{9351574, title = {Deep Learning Meets SAR: Concepts, models, pitfalls, and perspectives}, author = {Xiao Xiang Zhu and Sina Montazeri and Mohsin Ali and Yuansheng Hua and Yuanyuan Wang and Lichao Mou and Yilei Shi and Feng Xu and Richard Bamler}, doi = {10.1109/MGRS.2020.3046356}, year = {2021}, date = {2021-01-01}, journal = {IEEE Geoscience and Remote Sensing Magazine}, volume = {9}, number = {4}, pages = {143-172}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Lin, Jianzhe; Mou, Lichao; Zhu, Xiao Xiang; Ji, Xiangyang; Wang, Jane Z Attention-Aware Pseudo-3-D Convolutional Neural Network for Hyperspectral Image Classification Journal Article IEEE Transactions on Geoscience and Remote Sensing, 59 (9), pp. 7790-7802, 2021.
@article{9347797, title = {Attention-Aware Pseudo-3-D Convolutional Neural Network for Hyperspectral Image Classification}, author = {Jianzhe Lin and Lichao Mou and Xiao Xiang Zhu and Xiangyang Ji and Jane Z Wang}, doi = {10.1109/TGRS.2020.3038212}, year = {2021}, date = {2021-01-01}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, volume = {59}, number = {9}, pages = {7790-7802}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Lin, Jianzhe; Yu, Tianze; Mou, Lichao; Zhu, Xiaoxiang; Ward, Rabab Kreidieh; Wang, Jane Z Unifying Top–Down Views by Task-Specific Domain Adaptation Journal Article IEEE Transactions on Geoscience and Remote Sensing, 59 (6), pp. 4689-4702, 2021.
@article{9210589, title = {Unifying Top–Down Views by Task-Specific Domain Adaptation}, author = {Jianzhe Lin and Tianze Yu and Lichao Mou and Xiaoxiang Zhu and Rabab Kreidieh Ward and Jane Z Wang}, doi = {10.1109/TGRS.2020.3022608}, year = {2021}, date = {2021-01-01}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, volume = {59}, number = {6}, pages = {4689-4702}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Saha, Sudipan; Mou, Lichao; Zhu, Xiao Xiang; Bovolo, Francesca; Bruzzone, Lorenzo Semisupervised Change Detection Using Graph Convolutional Network Journal Article IEEE Geoscience and Remote Sensing Letters, 18 (4), pp. 607-611, 2021.
@article{9069898, title = {Semisupervised Change Detection Using Graph Convolutional Network}, author = {Sudipan Saha and Lichao Mou and Xiao Xiang Zhu and Francesca Bovolo and Lorenzo Bruzzone}, doi = {10.1109/LGRS.2020.2985340}, year = {2021}, date = {2021-01-01}, journal = {IEEE Geoscience and Remote Sensing Letters}, volume = {18}, number = {4}, pages = {607-611}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Heidler, Konrad; Mou, Lichao; Zhu, Xiao Xiang Seeing the Bigger Picture: Enabling Large Context Windows in Neural Networks by Combining Multiple Zoom Levels Inproceedings 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, pp. 3033-3036, 2021.
@inproceedings{9554434, title = {Seeing the Bigger Picture: Enabling Large Context Windows in Neural Networks by Combining Multiple Zoom Levels}, author = {Konrad Heidler and Lichao Mou and Xiao Xiang Zhu}, doi = {10.1109/IGARSS47720.2021.9554434}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS}, pages = {3033-3036}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Heidler, Konrad; Mou, Lichao; Baumhoer, Celia; Dietz, Andreas; Zhu, Xiao Xiang Hed-Unet: A Multi-Scale Framework for Simultaneous Segmentation and Edge Detection Inproceedings 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, pp. 3037-3040, 2021.
@inproceedings{9553585, title = {Hed-Unet: A Multi-Scale Framework for Simultaneous Segmentation and Edge Detection}, author = {Konrad Heidler and Lichao Mou and Celia Baumhoer and Andreas Dietz and Xiao Xiang Zhu}, doi = {10.1109/IGARSS47720.2021.9553585}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS}, pages = {3037-3040}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Jin, Pu; Mou, Lichao; Xia, Gui-Song; Zhu, Xiao Xiang Anomaly Detection in Aerial Videos Via Future Frame Prediction Networks Inproceedings 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, pp. 8237-8240, 2021.
@inproceedings{9554396, title = {Anomaly Detection in Aerial Videos Via Future Frame Prediction Networks}, author = {Pu Jin and Lichao Mou and Gui-Song Xia and Xiao Xiang Zhu}, doi = {10.1109/IGARSS47720.2021.9554396}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS}, pages = {8237-8240}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Jin, Pu; Mou, Lichao; Hua, Yuansheng; Xia, Gui-Song; Zhu, Xiao Xiang Temporal Relations Matter: A Two-Pathway Network for Aerial Video Recognition Inproceedings 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, pp. 8221-8224, 2021.
@inproceedings{9554868, title = {Temporal Relations Matter: A Two-Pathway Network for Aerial Video Recognition}, author = {Pu Jin and Lichao Mou and Yuansheng Hua and Gui-Song Xia and Xiao Xiang Zhu}, doi = {10.1109/IGARSS47720.2021.9554868}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS}, pages = {8221-8224}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Chen, Sining; Mou, Lichao; Li, Qingyu; Sun, Yao; Zhu, Xiao Xiang Mask-Height R-CNN: An End-to-End Network for 3D Building Reconstruction from Monocular Remote Sensing Imagery Inproceedings 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, pp. 1202-1205, 2021.
@inproceedings{9553121, title = {Mask-Height R-CNN: An End-to-End Network for 3D Building Reconstruction from Monocular Remote Sensing Imagery}, author = {Sining Chen and Lichao Mou and Qingyu Li and Yao Sun and Xiao Xiang Zhu}, doi = {10.1109/IGARSS47720.2021.9553121}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS}, pages = {1202-1205}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Sun, Yao; Hua, Yuansheng; Mou, Lichao; Zhu, Xiao Xiang Conditional GIS-Aware Network for Individual Building Segmentation in a VHR SAR Image Inproceedings 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, pp. 4532-4535, 2021.
@inproceedings{9553135, title = {Conditional GIS-Aware Network for Individual Building Segmentation in a VHR SAR Image}, author = {Yao Sun and Yuansheng Hua and Lichao Mou and Xiao Xiang Zhu}, doi = {10.1109/IGARSS47720.2021.9553135}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS}, pages = {4532-4535}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Luo, Cong; Hua, Yuansheng; Mou, Lichao; Zhu, Xiao Xiang Improving Land Cover Classification with a Shift-Invariant Center-Focusing Convolutional Neural Network Inproceedings 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, pp. 2863-2866, 2021.
@inproceedings{9554678, title = {Improving Land Cover Classification with a Shift-Invariant Center-Focusing Convolutional Neural Network}, author = {Cong Luo and Yuansheng Hua and Lichao Mou and Xiao Xiang Zhu}, doi = {10.1109/IGARSS47720.2021.9554678}, year = {2021}, date = {2021-01-01}, booktitle = {2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS}, pages = {2863-2866}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Abid, Nosheen; Shahzad, Muhammad; Malik, Muhammad Imran; Schwanecke, Ulrich; Ulges, Adrian; Kovács, György; Shafait, Faisal UCL: Unsupervised Curriculum Learning for water body classification from remote sensing imagery Journal Article International Journal of Applied Earth Observation and Geoinformation, 105, pp. 102568, 2021, ISSN: 1569-8432.
@article{Abid2021, title = {UCL: Unsupervised Curriculum Learning for water body classification from remote sensing imagery}, author = {Nosheen Abid and Muhammad Shahzad and Muhammad Imran Malik and Ulrich Schwanecke and Adrian Ulges and György Kovács and Faisal Shafait}, url = {https://www.sciencedirect.com/science/article/pii/S0303243421002750}, doi = {https://doi.org/10.1016/j.jag.2021.102568}, issn = {1569-8432}, year = {2021}, date = {2021-01-01}, journal = {International Journal of Applied Earth Observation and Geoinformation}, volume = {105}, pages = {102568}, abstract = {This paper presents a Convolutional Neural Networks (CNN) based Unsupervised Curriculum Learning approach for the recognition of water bodies to overcome the stated challenges for remote sensing based RGB imagery. The unsupervised nature of the presented algorithm eliminates the need for labelled training data. The problem is cast as a two class clustering problem (water and non-water), while clustering is done on deep features obtained by a pre-trained CNN. After initial clusters have been identified, representative samples from each cluster are chosen by the unsupervised curriculum learning algorithm for fine-tuning the feature extractor. The stated process is repeated iteratively until convergence. Three datasets have been used to evaluate the approach and show its effectiveness on varying scales: (i) SAT-6 dataset comprising high resolution aircraft images, (ii) Sentinel-2 of EuroSAT, comprising remote sensing images with low resolution, and (iii) PakSAT, a new dataset we created for this study. PakSAT is the first Pakistani Sentinel-2 dataset designed to classify water bodies of Pakistan. Extensive experiments on these datasets demonstrate the progressive learning behaviour of UCL and reported promising results of water classification on all three datasets. The obtained accuracies outperform the supervised methods in domain adaptation, demonstrating the effectiveness of the proposed algorithm.}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Mughal, Muhammad Hamza; Khokhar, Muhammad Jawad; Shahzad, Muhammad Assisting UAV Localization Via Deep Contextual Image Matching Journal Article IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 14, pp. 2445-2457, 2021.
@article{9336232, title = {Assisting UAV Localization Via Deep Contextual Image Matching}, author = {Muhammad Hamza Mughal and Muhammad Jawad Khokhar and Muhammad Shahzad}, doi = {10.1109/JSTARS.2021.3054832}, year = {2021}, date = {2021-01-01}, journal = {IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing}, volume = {14}, pages = {2445-2457}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Usmani, Fehmida; Khan, Ihtesham; Masood, Muhammad Umar; Ahmad, Arsalan; Shahzad, Muhammad; Curri, Vittorio Convolutional neural network for quality of transmission prediction of unestablished lightpaths Journal Article Microwave and Optical Technology Letters, 63 (10), pp. 2461-2469, 2021.
@article{https://doi.org/10.1002/mop.32996, title = {Convolutional neural network for quality of transmission prediction of unestablished lightpaths}, author = {Fehmida Usmani and Ihtesham Khan and Muhammad Umar Masood and Arsalan Ahmad and Muhammad Shahzad and Vittorio Curri}, url = {https://onlinelibrary.wiley.com/doi/abs/10.1002/mop.32996}, doi = {https://doi.org/10.1002/mop.32996}, year = {2021}, date = {2021-01-01}, journal = {Microwave and Optical Technology Letters}, volume = {63}, number = {10}, pages = {2461-2469}, abstract = {With the advancement in evolving concepts of software-defined networks and elastic-optical-network, the number of design parameters is growing dramatically, making the lightpath (LP) deployment more complex. Typically, worst-case assumptions are utilized to calculate the quality-of-transmission (QoT) with the provisioning of high-margin requirements. To this aim, precise and advanced estimation of the QoT of the LP is essential for reducing this provisioning margin. In this investigation, we present convolutional-neural-networks (CNN) based architecture to accurately calculate QoT before the actual deployment of LP in an unseen network. The proposed model is trained on the data acquired from already established LP of a completely different network. The metric considered to evaluate the QoT of LP is the generalized signal-to-noise ratio (GSNR). The synthetic dataset is generated by utilizing well appraised GNPy simulation tool. Promising results are achieved, showing that the proposed CNN model considerably minimizes the GSNR uncertainty and, consequently, the provisioning margin.}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Zulfiqar, Annus; Ghaffar, Muhammad M; Shahzad, Muhammad; Weis, Christian; Malik, Muhammad I; Shafait, Faisal; Wehn, Norbert AI-ForestWatch: semantic segmentation based end-to-end framework for forest estimation and change detection using multi-spectral remote sensing imagery Journal Article Journal of Applied Remote Sensing, 15 (2), pp. 1–21, 2021.
@article{Zulfiqar2021, title = {AI-ForestWatch: semantic segmentation based end-to-end framework for forest estimation and change detection using multi-spectral remote sensing imagery}, author = {Annus Zulfiqar and Muhammad M Ghaffar and Muhammad Shahzad and Christian Weis and Muhammad I Malik and Faisal Shafait and Norbert Wehn}, url = {https://doi.org/10.1117/1.JRS.15.024518}, doi = {10.1117/1.JRS.15.024518}, year = {2021}, date = {2021-01-01}, journal = {Journal of Applied Remote Sensing}, volume = {15}, number = {2}, pages = {1--21}, publisher = {SPIE}, abstract = {Forest change detection is crucial for sustainable forest management. The changes in the forest area due to deforestation (such as wild fires or logging due to development activities) or afforestation alter the total forest area. Additionally, it impacts the available stock for commercial purposes, climate change due to carbon emissions, and biodiversity of the forest habitat estimations, which are essential for disaster management and policy making. In recent years, foresters have relied on hand-crafted features or bi-temporal change detection methods to detect change in the remote sensing imagery to estimate the forest area. Due to manual processing steps, these methods are fragile and prone to errors and can generate inaccurate (i.e., under or over) segmentation results. In contrast to traditional methods, we present AI-ForestWatch, an end to end framework for forest estimation and change analysis. The proposed approach uses deep convolution neural network-based semantic segmentation to process multi-spectral space-borne images to quantitatively monitor the forest cover change patterns by automatically extracting features from the dataset. Our analysis is completely data driven and has been performed using extended (with vegetation indices) Landsat-8 multi-spectral imagery from 2014 to 2020. As a case study, we estimated the forest area in 15 districts of Pakistan and generated forest change maps from 2014 to 2020, where major afforestation activity is carried out during this period. Our critical analysis shows an improvement of forest cover in 14 out of 15 districts. The AI-ForestWatch framework along with the associated dataset will be made public upon publication so that it can be adapted by other countries or regions.}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Rizvi, Syed; Aslam, Warda; Shahzad, Muhammad; Fraz, Muhammad PROUD-MAL: static analysis-based progressive framework for deep unsupervised malware classification of windows portable executable Journal Article Complex & Intelligent Systems, pp. 1-13, 2021.
@article{articleb, title = {PROUD-MAL: static analysis-based progressive framework for deep unsupervised malware classification of windows portable executable}, author = {Syed Rizvi and Warda Aslam and Muhammad Shahzad and Muhammad Fraz}, doi = {10.1007/s40747-021-00560-1}, year = {2021}, date = {2021-01-01}, journal = {Complex & Intelligent Systems}, pages = {1-13}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Nouman, Ahmed; Saha, Sudipan; Shahzad, Muhammad; Fraz, Muhammad Moazam; Zhu, Xiao Xiang Progressive Unsupervised Deep Transfer Learning for Forest Mapping in Satellite Image Inproceedings International Conference on Computer Vision (ICCV), pp. 752–761, 2021.
@inproceedings{dlr145759, title = {Progressive Unsupervised Deep Transfer Learning for Forest Mapping in Satellite Image}, author = {Ahmed Nouman and Sudipan Saha and Muhammad Shahzad and Muhammad Moazam Fraz and Xiao Xiang Zhu}, url = {https://elib.dlr.de/145759/}, year = {2021}, date = {2021-01-01}, booktitle = {International Conference on Computer Vision (ICCV)}, journal = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops}, pages = {752--761}, abstract = {Automated forest mapping is important to understand our forests that play a key role in ecological system. However, efforts towards forest mapping is impeded by difficulty to collect labeled forest images that show large intraclass variation. Recently unsupervised learning has shown promising capability when exploiting limited labeled data. Motivated by this, we propose a progressive unsupervised deep transfer learning method for forest mapping. The proposed method exploits a pre-trained model that is subsequently fine-tuned over the target forest domain. We propose two different fine-tuning mechanisms, one works in a totally unsupervised setting by jointly learning the parameters of CNN and the k-means based cluster assignments of the resulting features and the other one works in a semi-supervised setting by exploiting the extracted k-nearest neighbor based pseudo labels. The proposed progressive scheme is evaluated on publicly available EuroSAT dataset using the relevant base model trained on BigEarth-Net labels. The results show that the proposed method greatly improves the forest regions classification accuracy as compared to the unsupervised baseline, nearly approaching the supervised classification approach.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Abid, Nosheen; Malik, Muhammad Imran; Shahzad, Muhammad; Shafait, Faisal; Ali, Haider; Ghaffar, Muhammad Mohsin; Weis, Christian; Wehn, Norbert; Liwicki, Marcus Burnt Forest Estimation from Sentinel-2 Imagery of Australia using Unsupervised Deep Learning Inproceedings 2021 Digital Image Computing: Techniques and Applications (DICTA), pp. 01-08, 2021.
@inproceedings{9647174, title = {Burnt Forest Estimation from Sentinel-2 Imagery of Australia using Unsupervised Deep Learning}, author = {Nosheen Abid and Muhammad Imran Malik and Muhammad Shahzad and Faisal Shafait and Haider Ali and Muhammad Mohsin Ghaffar and Christian Weis and Norbert Wehn and Marcus Liwicki}, doi = {10.1109/DICTA52665.2021.9647174}, year = {2021}, date = {2021-01-01}, booktitle = {2021 Digital Image Computing: Techniques and Applications (DICTA)}, pages = {01-08}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Javed, Muhammad Gohar; Raza, Minahil; Ghaffar, Muhammad Mohsin; Weis, Christian; Wehn, Norbert; Shahzad, Muhammad; Shafait, Faisal QuantYOLO: A High-Throughput and Power-Efficient Object Detection Network for Resource and Power Constrained UAVs Inproceedings 2021 Digital Image Computing: Techniques and Applications (DICTA), pp. 01-08, 2021.
@inproceedings{9647121, title = {QuantYOLO: A High-Throughput and Power-Efficient Object Detection Network for Resource and Power Constrained UAVs}, author = {Muhammad Gohar Javed and Minahil Raza and Muhammad Mohsin Ghaffar and Christian Weis and Norbert Wehn and Muhammad Shahzad and Faisal Shafait}, doi = {10.1109/DICTA52665.2021.9647121}, year = {2021}, date = {2021-01-01}, booktitle = {2021 Digital Image Computing: Techniques and Applications (DICTA)}, pages = {01-08}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Saha, Sudipan; Mou, Lichao; Shahzad, Muhammad; Zhu, Xiao Xiang Segmentation of VHR EO Images using Unsupervised Learning Inproceedings arXiv, 2021, ISSN: 16130073.
@inproceedings{Saha2021i, title = {Segmentation of VHR EO Images using Unsupervised Learning}, author = {Sudipan Saha and Lichao Mou and Muhammad Shahzad and Xiao Xiang Zhu}, url = {https://arxiv.org/abs/2108.04222v2}, doi = {10.48550/arxiv.2108.04222}, issn = {16130073}, year = {2021}, date = {2021-01-01}, journal = {ECML PKDD 2021 workshop Machine Learning for Earth Observation (MACLEAN)}, publisher = {arXiv}, abstract = {Semantic segmentation is a crucial step in many Earth observation tasks. Large quantity of pixel-level annotation is required to train deep networks for semantic segmentation. Earth observation techniques are applied to varieties of applications and since classes vary widely depending on the applications, therefore, domain knowledge is often required to label Earth observation images, impeding availability of labeled training data in many Earth observation applications. To tackle these challenges, in this paper we propose an unsupervised semantic segmentation method that can be trained using just a single unlabeled scene. Remote sensing scenes are generally large. The proposed method exploits this property to sample smaller patches from the larger scene and uses deep clustering and contrastive learning to refine the weights of a lightweight deep model composed of a series of the convolution layers along with an embedded channel attention. After unsupervised training on the target image/scene, the model automatically segregates the major classes present in the scene and produces the segmentation map. Experimental results on the Vaihingen dataset demonstrate the efficacy of the proposed method.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} }

Baumhoer, Celia; Dietz, Andreas; Heidler, Konrad; Mou, LiChao; Zhu, Xiao Xiang; Künzer, Claudia Towards an Antarctic Ice Shelf and Glacier Front Monitoring Service based on Sentinel-1 Data Inproceedings UK Antarctic Science Conference 2021, 2021. Abstract | Links | BibTeX | Tags: @inproceedings{dlr141526, title = {Towards an Antarctic Ice Shelf and Glacier Front Monitoring Service based on Sentinel-1 Data}, author = {Celia Baumhoer and Andreas Dietz and Konrad Heidler and LiChao Mou and Xiao Xiang Zhu and Claudia Künzer}, url = {https://elib.dlr.de/141526/}, year = {2021}, date = {2021-00-01}, booktitle = {UK Antarctic Science Conference 2021}, abstract = {The extent of Antarctic ice shelves and glacier tongues is important for accurate sea level rise predictions. If ice shelf areas with strong buttressing effects are lost the ice discharge of the Antarctic Ice Sheet increases. Therefore, it is crucial to monitor ice shelf front positions and keep track of glacier and ice shelf front dynamics. The German Aerospace Center (DLR) is currently facilitating a project aiming to bring together the expertise in polar research: The Polar Monitor Project. Within this project, we are establishing an automated calving front monitoring service providing monthly Ice Shelf and Glacier Front Time Series (IceLines) via the EOC GeoService hosted by DLR. IceLines is based on a deep learning architecture combining segmentation and edge detection optimized for calving front extraction. The monthly front positions are extracted from Sentinel-1 SAR data over the Antarctic coastline. We will outline the implementation of IceLines within the DLR processing infrastructure, present first results and highlight the potential future usage for the scientific community. 
Future implementations within the Polar Monitor Project will also provide access to daily global snow cover (GlobalSnowPack) and the Antarctic grounding line derived from Sentinel-1 imagery.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } | |

| Kochupillai, M Outline of a Novel Approach for Identifying Ethical Issues in Early Stages of AI4EO Research, Conference paper for the IEEE’s International Geoscience and Remote Sensing Symposium (IGARSS) Conference 2021. BibTeX | Tags: @conference{RN2472, title = {Outline of a Novel Approach for Identifying Ethical Issues in Early Stages of AI4EO Research, Conference paper for the IEEE’s International Geoscience and Remote Sensing Symposium (IGARSS)}, author = {M Kochupillai}, year = {2021}, date = {2021-00-00}, journal = {Conference paper for the IEEE’s International Geoscience and Remote Sensing Symposium (IGARSS),}, keywords = {}, pubstate = {published}, tppubtype = {conference} } |

| Hua, Y; Mou, L; Jin, P; Zhu, X X MultiScene: A Large-scale Dataset and Benchmark for Multi-scene Recognition in Single Aerial Images Journal Article IEEE Transactions on Geoscience and Remote Sensing, 2021. BibTeX | Tags: @article{hua2021multiscene, title = {MultiScene: A Large-scale Dataset and Benchmark for Multi-scene Recognition in Single Aerial Images}, author = {Y Hua and L Mou and P Jin and X X Zhu}, year = {2021}, date = {2021-00-00}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, keywords = {}, pubstate = {published}, tppubtype = {article} } |

Kochupillai, Mrinalini Outline of a Novel Approach for Identifying Ethical Issues in Early Stages of AI4EO Research Conference 2021. @conference{Kochupillai2021, title = {Outline of a Novel Approach for Identifying Ethical Issues in Early Stages of AI4EO Research}, author = {Mrinalini Kochupillai}, url = {https://igarss2021.com/view_paper.php?PaperNum=1525}, year = {2021}, date = {2021-00-00}, journal = {Conference paper for the IEEE’s International Geoscience and Remote Sensing Symposium (IGARSS),}, keywords = {}, pubstate = {published}, tppubtype = {conference} } | |

2020 |

|

| Mou, Lichao; Hua, Yuansheng; Jin, Pu; Zhu, Xiao Xiang ERA: A dataset and deep learning benchmark for event recognition in aerial videos Journal Article IEEE Geoscience and Remote Sensing Magazine, 2020, (in press). BibTeX | Tags: @article{Mou2020, title = {ERA: A dataset and deep learning benchmark for event recognition in aerial videos}, author = {Lichao Mou and Yuansheng Hua and Pu Jin and Xiao Xiang Zhu}, year = {2020}, date = {2020-01-01}, journal = {IEEE Geoscience and Remote Sensing Magazine}, note = {in press}, keywords = {}, pubstate = {published}, tppubtype = {article} } |

| Mou, Lichao; Hua, Yuansheng; Zhu, Xiao Xiang Relation matters: Relational context-aware fully convolutional network for semantic segmentation of high resolution aerial images Journal Article IEEE Transactions on Geoscience and Remote Sensing, 2020, (in press). BibTeX | Tags: @article{Mou2020a, title = {Relation matters: Relational context-aware fully convolutional network for semantic segmentation of high resolution aerial images}, author = {Lichao Mou and Yuansheng Hua and Xiao Xiang Zhu}, year = {2020}, date = {2020-01-01}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, note = {in press}, keywords = {}, pubstate = {published}, tppubtype = {article} } |

| Hua, Yuansheng; Mou, Lichao; Zhu, Xiao Xiang Relation network for multilabel aerial image classification Journal Article IEEE Transactions on Geoscience and Remote Sensing, 58 (7), pp. 4558-4572, 2020. BibTeX | Tags: @article{Hua2020, title = {Relation network for multilabel aerial image classification}, author = {Yuansheng Hua and Lichao Mou and Xiao Xiang Zhu}, year = {2020}, date = {2020-01-01}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, volume = {58}, number = {7}, pages = {4558-4572}, keywords = {}, pubstate = {published}, tppubtype = {article} } |

| Rußwurm, Marc; Ali, Mohsin; Zhu, Xiaoxiang; Gal, Yarin; Körner, Marco Model and Data Uncertainty for Satellite Time Series Forecasting with Deep Recurrent Models Inproceedings IGARSS 2020 IEEE International Geoscience and Remote Sensing Symposium, IEEE 2020. BibTeX | Tags: @inproceedings{russwurm2020c, title = {Model and Data Uncertainty for Satellite Time Series Forecasting with Deep Recurrent Models}, author = {Marc Rußwurm and Mohsin Ali and Xiaoxiang Zhu and Yarin Gal and Marco Körner}, year = {2020}, date = {2020-01-01}, booktitle = {IGARSS 2020 IEEE International Geoscience and Remote Sensing Symposium}, organization = {IEEE}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } |

| Kochupillai, Mrinalini; Lütge, Christoph; Poszler, Franziska Programming Away Human Rights and Responsibilities? “The Moral Machine Experiment” and the Need for a More “Humane” AV Future Journal Article NanoEthics, 14 (3), pp. 285-299, 2020, ISSN: 1871-4765. BibTeX | Tags: @article{RN2469, title = {Programming Away Human Rights and Responsibilities? “The Moral Machine Experiment” and the Need for a More “Humane” AV Future}, author = {Mrinalini Kochupillai and Christoph Lütge and Franziska Poszler}, issn = {1871-4765}, year = {2020}, date = {2020-01-01}, journal = {NanoEthics}, volume = {14}, number = {3}, pages = {285-299}, keywords = {}, pubstate = {published}, tppubtype = {article} } |

| Brinkmann, Johannes; Kochupillai, Mrinalini Law, Business, and Legitimacy Book Chapter pp. 489-507, 2020, ISSN: 3030146219. BibTeX | Tags: @inbook{RN2470, title = {Law, Business, and Legitimacy}, author = {Johannes Brinkmann and Mrinalini Kochupillai}, issn = {3030146219}, year = {2020}, date = {2020-01-01}, journal = {Handbook of Business Legitimacy: Responsibility, Ethics and Society}, pages = {489-507}, keywords = {}, pubstate = {published}, tppubtype = {inbook} } |

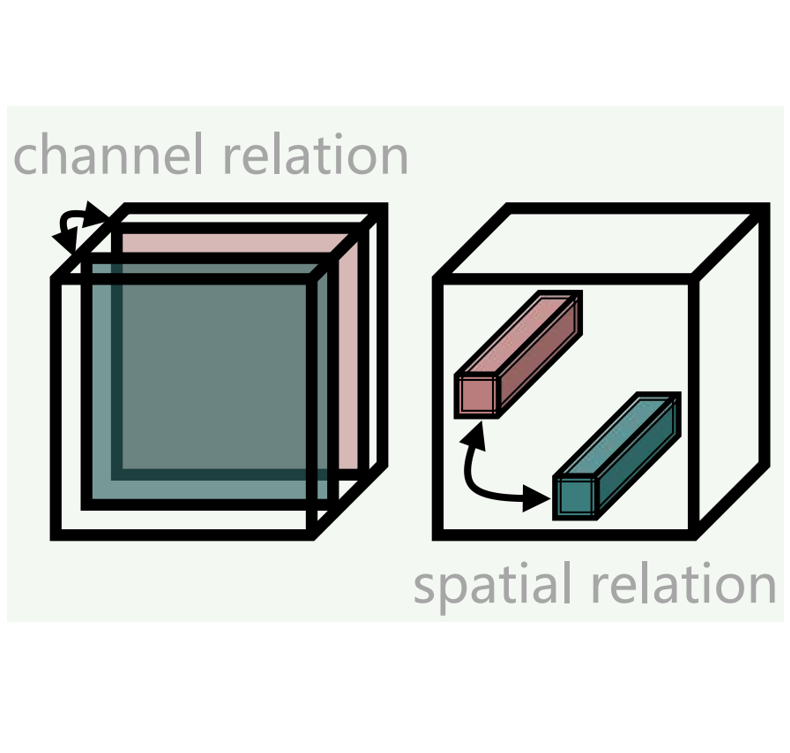

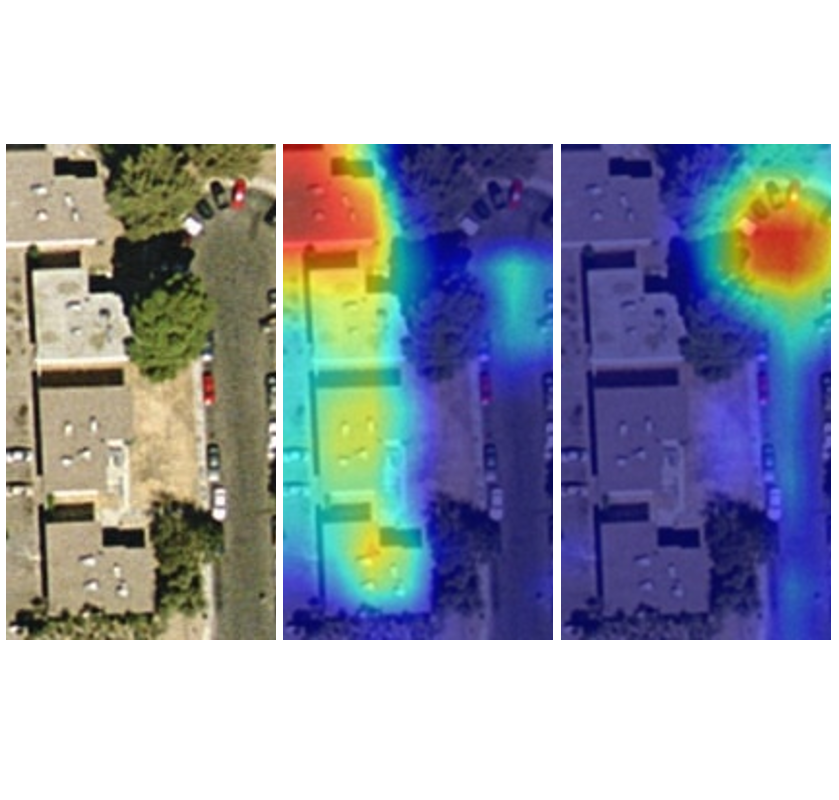

Mou, Lichao; Hua, Yuansheng; Zhu, Xiao Xiang Relation Matters: Relational Context-Aware Fully Convolutional Network for Semantic Segmentation of High-Resolution Aerial Images Journal Article IEEE Transactions on Geoscience and Remote Sensing, 58 , pp. 7557-7569, 2020, ISSN: 15580644, (in press). Abstract | Links | BibTeX | Tags: @article{Mou2020c, title = {Relation Matters: Relational Context-Aware Fully Convolutional Network for Semantic Segmentation of High-Resolution Aerial Images}, author = {Lichao Mou and Yuansheng Hua and Xiao Xiang Zhu}, doi = {10.1109/TGRS.2020.2979552}, issn = {15580644}, year = {2020}, date = {2020-01-01}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, volume = {58}, pages = {7557-7569}, publisher = {Institute of Electrical and Electronics Engineers Inc.}, abstract = {Most current semantic segmentation approaches fall back on deep convolutional neural networks (CNNs). However, their use of convolution operations with local receptive fields causes failures in modeling contextual spatial relations. Prior works have sought to address this issue by using graphical models or spatial propagation modules in networks. But such models often fail to capture long-range spatial relationships between entities, which leads to spatially fragmented predictions. Moreover, recent works have demonstrated that channel-wise information also acts a pivotal part in CNNs. In this article, we introduce two simple yet effective network units, the spatial relation module, and the channel relation module to learn and reason about global relationships between any two spatial positions or feature maps, and then produce Relation-Augmented (RA) feature representations. The spatial and channel relation modules are general and extensible, and can be used in a plug-and-play fashion with the existing fully convolutional network (FCN) framework. 
We evaluate relation module-equipped networks on semantic segmentation tasks using two aerial image data sets, namely International Society for Photogrammetry and Remote Sensing (ISPRS) Vaihingen and Potsdam data sets, which fundamentally depend on long-range spatial relational reasoning. The networks achieve very competitive results, a mean score of 88.54% on the Vaihingen data set and a mean score of 88.01% on the Potsdam data set, bringing significant improvements over baselines.}, note = {in press}, keywords = {}, pubstate = {published}, tppubtype = {article} } | |

Kochupillai, Mrinalini; Lütge, Christoph; Poszler, Franziska Programming Away Human Rights and Responsibilities? “The Moral Machine Experiment” and the Need for a More “Humane” AV Future Journal Article NanoEthics, 14 (3), pp. 285-299, 2020, ISSN: 18714765. Abstract | Links | BibTeX | Tags: @article{Kochupillai2020, title = {Programming Away Human Rights and Responsibilities? “The Moral Machine Experiment” and the Need for a More “Humane” AV Future}, author = {Mrinalini Kochupillai and Christoph Lütge and Franziska Poszler}, url = {https://link.springer.com/article/10.1007/s11569-020-00374-4}, doi = {10.1007/s11569-020-00374-4}, issn = {18714765}, year = {2020}, date = {2020-01-01}, journal = {NanoEthics}, volume = {14}, number = {3}, pages = {285-299}, publisher = {Springer Science and Business Media Deutschland GmbH}, abstract = {Dilemma situations involving the choice of which human life to save in the case of unavoidable accidents are expected to arise only rarely in the context of autonomous vehicles (AVs). Nonetheless, the scientific community has devoted significant attention to finding appropriate and (socially) acceptable automated decisions in the event that AVs or drivers of AVs were indeed to face such situations. Awad and colleagues, in their now famous paper “The Moral Machine Experiment”, used a “multilingual online ‘serious game’ for collecting large-scale data on how citizens would want AVs to solve moral dilemmas in the context of unavoidable accidents.” Awad and colleagues undoubtedly collected an impressive and philosophically useful data set of armchair intuitions. However, we argue that applying their findings to the development of “global, socially acceptable principles for machine learning” would violate basic tenets of human rights law and fundamental principles of human dignity. 
To make its arguments, our paper cites principles of tort law, relevant case law, provisions from the Universal Declaration of Human Rights, and rules from the German Ethics Code for Autonomous and Connected Driving.}, keywords = {}, pubstate = {published}, tppubtype = {article} } | |

Brinkmann, Johannes; Kochupillai, Mrinalini Law, Business, and Legitimacy Book Chapter pp. 489-507, Elsevier BV, 2020, ISSN: 3030146219. @inbook{Brinkmann2020, title = {Law, Business, and Legitimacy}, author = {Johannes Brinkmann and Mrinalini Kochupillai}, url = {https://papers.ssrn.com/abstract=3373565}, doi = {10.2139/SSRN.3373565}, issn = {3030146219}, year = {2020}, date = {2020-01-01}, journal = {Handbook of Business Legitimacy: Responsibility, Ethics and Society}, pages = {489-507}, publisher = {Elsevier BV}, keywords = {}, pubstate = {published}, tppubtype = {inbook} } | |

Mou, Lichao; Hua, Yuansheng; Jin, Pu; Zhu, Xiao Xiang ERA: A Dataset and Deep Learning Benchmark for Event Recognition in Aerial Videos Journal Article IEEE Geoscience and Remote Sensing Magazine, 2020, (in press). Abstract | Links | BibTeX | Tags: @article{Mou2020d, title = {ERA: A Dataset and Deep Learning Benchmark for Event Recognition in Aerial Videos}, author = {Lichao Mou and Yuansheng Hua and Pu Jin and Xiao Xiang Zhu}, url = {https://arxiv.org/abs/2001.11394v4}, doi = {10.48550/arxiv.2001.11394}, year = {2020}, date = {2020-01-01}, journal = {IEEE Geoscience and Remote Sensing Magazine}, abstract = {Along with the increasing use of unmanned aerial vehicles (UAVs), large volumes of aerial videos have been produced. It is unrealistic for humans to screen such big data and understand their contents. Hence methodological research on the automatic understanding of UAV videos is of paramount importance. In this paper, we introduce a novel problem of event recognition in unconstrained aerial videos in the remote sensing community and present a large-scale, human-annotated dataset, named ERA (Event Recognition in Aerial videos), consisting of 2,864 videos each with a label from 25 different classes corresponding to an event unfolding 5 seconds. The ERA dataset is designed to have a significant intra-class variation and inter-class similarity and captures dynamic events in various circumstances and at dramatically various scales. Moreover, to offer a benchmark for this task, we extensively validate existing deep networks. We expect that the ERA dataset will facilitate further progress in automatic aerial video comprehension. The website is https://lcmou.github.io/ERA_Dataset/}, note = {in press}, keywords = {}, pubstate = {published}, tppubtype = {article} } | |

Behrens, Freya; Sauder, Jonathan; Jung, Peter Neurally Augmented ALISTA Journal Article 2020. Abstract | Links | BibTeX | Tags: @article{Behrens2020, title = {Neurally Augmented ALISTA}, author = {Freya Behrens and Jonathan Sauder and Peter Jung}, url = {https://arxiv.org/abs/2010.01930v1}, doi = {10.48550/arxiv.2010.01930}, year = {2020}, date = {2020-01-01}, abstract = {It is well-established that many iterative sparse reconstruction algorithms can be unrolled to yield a learnable neural network for improved empirical performance. A prime example is learned ISTA (LISTA) where weights, step sizes and thresholds are learned from training data. Recently, Analytic LISTA (ALISTA) has been introduced, combining the strong empirical performance of a fully learned approach like LISTA, while retaining theoretical guarantees of classical compressed sensing algorithms and significantly reducing the number of parameters to learn. However, these parameters are trained to work in expectation, often leading to suboptimal reconstruction of individual targets. In this work we therefore introduce Neurally Augmented ALISTA, in which an LSTM network is used to compute step sizes and thresholds individually for each target vector during reconstruction. This adaptive approach is theoretically motivated by revisiting the recovery guarantees of ALISTA. We show that our approach further improves empirical performance in sparse reconstruction, in particular outperforming existing algorithms by an increasing margin as the compression ratio becomes more challenging.}, keywords = {}, pubstate = {published}, tppubtype = {article} } | |

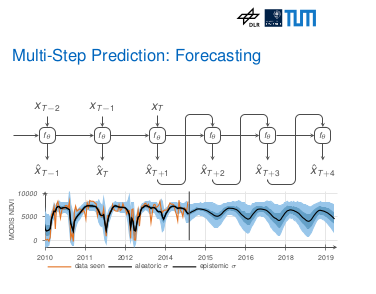

Rußwurm, Marc; Ali, Mohsin; Zhu, Xiaoxiang; Gal, Yarin; Körner, Marco Model and Data Uncertainty for Satellite Time Series Forecasting with Deep Recurrent Models Inproceedings IGARSS 2020 IEEE International Geoscience and Remote Sensing Symposium, pp. 1–4, IEEE 2020. Abstract | Links | BibTeX | Tags: @inproceedings{Russwurm2020b, title = {Model and Data Uncertainty for Satellite Time Series Forecasting with Deep Recurrent Models}, author = {Marc Rußwurm and Mohsin Ali and Xiaoxiang Zhu and Yarin Gal and Marco Körner}, url = {https://elib.dlr.de/139306/}, year = {2020}, date = {2020-01-01}, booktitle = {IGARSS 2020 IEEE International Geoscience and Remote Sensing Symposium}, pages = {1--4}, organization = {IEEE}, abstract = {Deep Learning is often criticized as black-box method which often provides accurate predictions, but limited explanation of the underlying processes and no indication when to not trust those predictions. Equipping existing deep learning models with an (approximate) notion of uncertainty can help mitigate both these issues therefore their use should be known more broadly in the community. The Bayesian deep learning community has developed model-agnostic and easy to-implement methodology to estimate both data and model uncertainty within deep learning models which is hardly applied in the remote sensing community. In this work, we adopt this methodology for deep recurrent satellite time series forecasting, and test its assumptions on data and model uncertainty. We demonstrate its effectiveness on two applications on climate change, and event change detection and outline limitations.}, keywords = {}, pubstate = {published}, tppubtype = {inproceedings} } | |

2019 |

|

Hua, Yuansheng; Mou, Lichao; Zhu, Xiao Xiang Relation Network for Multi-label Aerial Image Classification Journal Article IEEE Transactions on Geoscience and Remote Sensing, 58 (7), pp. 4558-4572, 2019. Abstract | Links | BibTeX | Tags: @article{Hua2019, title = {Relation Network for Multi-label Aerial Image Classification}, author = {Yuansheng Hua and Lichao Mou and Xiao Xiang Zhu}, url = {http://arxiv.org/abs/1907.07274 http://dx.doi.org/10.1109/TGRS.2019.2963364}, doi = {10.1109/TGRS.2019.2963364}, year = {2019}, date = {2019-01-01}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, volume = {58}, number = {7}, pages = {4558-4572}, publisher = {Institute of Electrical and Electronics Engineers Inc.}, abstract = {Multi-label classification plays a momentous role in perceiving intricate contents of an aerial image and triggers several related studies over the last years. However, most of them deploy few efforts in exploiting label relations, while such dependencies are crucial for making accurate predictions. Although an LSTM layer can be introduced to modeling such label dependencies in a chain propagation manner, the efficiency might be questioned when certain labels are improperly inferred. To address this, we propose a novel aerial image multi-label classification network, attention-aware label relational reasoning network. Particularly, our network consists of three elemental modules: 1) a label-wise feature parcel learning module, 2) an attentional region extraction module, and 3) a label relational inference module. To be more specific, the label-wise feature parcel learning module is designed for extracting high-level label-specific features. The attentional region extraction module aims at localizing discriminative regions in these features and yielding attentional label-specific features. The label relational inference module finally predicts label existences using label relations reasoned from outputs of the previous module. 
The proposed network is characterized by its capacities of extracting discriminative label-wise features in a proposal-free way and reasoning about label relations naturally and interpretably. In our experiments, we evaluate the proposed model on the UCM multi-label dataset and a newly produced dataset, AID multi-label dataset. Quantitative and qualitative results on these two datasets demonstrate the effectiveness of our model. To facilitate progress in the multi-label aerial image classification, the AID multi-label dataset will be made publicly available.}, keywords = {}, pubstate = {published}, tppubtype = {article} } | |

0000 |

|

Yu, Tianze; Lin, Jianzhe; Mou, Lichao; Hua, Yuansheng; Zhu, Xiao Xiang; Wang, Z. Jane SCIDA: Self-Correction Integrated Domain Adaptation from Single- to Multi-label Aerial Images Journal Article IEEE Transactions on Geoscience and Remote Sensing, 0000, (in press). BibTeX | Tags: @article{Yuinpress, title = {SCIDA: Self-Correction Integrated Domain Adaptation from Single- to Multi-label Aerial Images}, author = {Tianze Yu and Jianzhe Lin and Lichao Mou and Yuansheng Hua and Xiao Xiang Zhu and Z. Jane Wang}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, note = {in press}, keywords = {}, pubstate = {published}, tppubtype = {article} } | |

Kandala, Hitesh; Saha, Sudipan; Banerjee, Biplab; Zhu, Xiao Xiang Exploring Transformer and Multi-label Classification for Remote Sensing Image Captioning Journal Article IEEE Geoscience and Remote Sensing Letters, 0000. @article{Kandala2022, title = {Exploring Transformer and Multi-label Classification for Remote Sensing Image Captioning}, author = {Kandala, Hitesh and Saha, Sudipan and Banerjee, Biplab and Zhu, Xiao Xiang}, journal = {IEEE Geoscience and Remote Sensing Letters}, keywords = {}, pubstate = {published}, tppubtype = {article} } |